Serving Large Language Models (LLMs) at scale is a massive engineering challenge because of Key-Value (KV) cache management. As models grow in size and reasoning capability, the KV cache footprint increases and becomes a major bottleneck for throughput and latency. For modern Transformers, this cache can occupy multiple gigabytes.
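For a sense of scale, the KV cache size follows directly from the model shape. Each layer stores two tensors (K and V), each holding `num_kv_heads * head_dim` values per token. The sketch below assumes a Llama-3.1-8B-like configuration (32 layers, 8 KV heads under grouped-query attention, head dimension 128, 16-bit values). The function name `kv_cache_bytes` is hypothetical.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1, bytes_per_elem: int = 2) -> int:
    """Total bytes for K and V across all layers (the leading 2 = one K plus one V)."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


# Assumed Llama-3.1-8B-like shape with fp16/bf16 storage.
per_token = kv_cache_bytes(32, 8, 128, seq_len=1)
full_ctx = kv_cache_bytes(32, 8, 128, seq_len=128 * 1024)

print(f"{per_token / 1024:.0f} KiB per token")         # 128 KiB per token
print(f"{full_ctx / 1024**3:.0f} GiB for 128K tokens")  # 16 GiB for 128K tokens
```

The size grows linearly with context length and batch size, so a handful of long-context requests can claim a large share of GPU memory.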

NVIDIA researchers have introduced KVTC (KV Cache Transform Coding). This lightweight transform coder compresses KV caches for compact on-GPU and off-GPU storage. It achieves up to 20x compression while maintaining reasoning and long-context accuracy. For specific use cases, it can reach 40x or higher.

The Memory Dilemma in LLM Inference

In production, inference frameworks treat local KV caches like databases. Strategies like prefix sharing promote the reuse of caches to speed up responses. However, stale caches consume scarce GPU memory. Developers currently face a difficult choice:

Keep the cache: Occupies memory needed for other users.

Discard the cache: Incurs the high cost of recomputation.

Offload the cache: Moves data to CPU DRAM or SSDs, leading to transfer overheads.

KVTC largely mitigates this dilemma by lowering the cost of on-chip retention and reducing the bandwidth required for offloading.

How the KVTC Pipeline Works

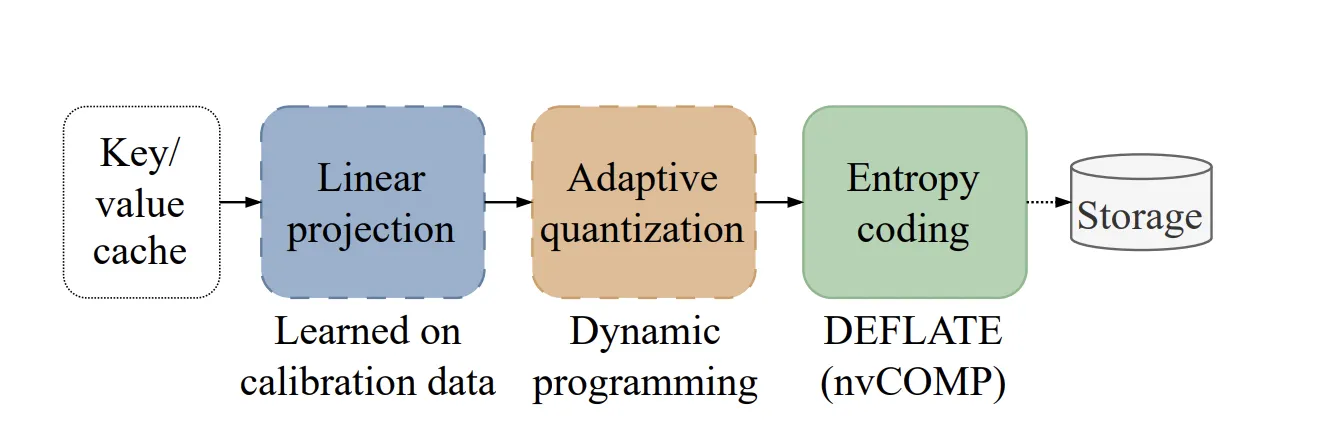

The method is inspired by classical media compression. It applies a learned orthonormal transform, followed by adaptive quantization and entropy coding.

1. Feature Decorrelation (PCA)

Different attention heads often show similar patterns and a high degree of correlation. KVTC uses Principal Component Analysis (PCA) to linearly decorrelate features. Unlike other methods that calculate a separate decomposition for every prompt, KVTC computes the PCA basis matrix V once on a calibration dataset. This matrix is then reused for all future caches at inference time.
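The calibrate-once, reuse-everywhere idea can be sketched with NumPy's SVD. This is a minimal illustration under stated assumptions: the features are synthetic correlated vectors standing in for per-token KV features, and the helper names (`fit_pca_basis`, `project`, `reconstruct`) are hypothetical, not KVTC's code.

```python
import numpy as np


def fit_pca_basis(calib):
    """Fit the PCA basis once, offline, on calibration vectors of shape (n, dim).

    Returns the feature mean, the orthonormal basis V (one principal direction
    per column, sorted by decreasing variance), and the per-component variances.
    """
    mean = calib.mean(axis=0)
    # Rows of vt from the SVD of the centered data are the principal directions.
    _, s, vt = np.linalg.svd(calib - mean, full_matrices=False)
    variances = s**2 / (calib.shape[0] - 1)
    return mean, vt.T, variances


def project(x, mean, V):
    """Decorrelate new cache vectors with the fixed basis."""
    return (x - mean) @ V


def reconstruct(coeffs, mean, V):
    """Invert the transform; V is orthonormal, so its transpose is its inverse."""
    return coeffs @ V.T + mean


rng = np.random.default_rng(0)
# Synthetic stand-in for KV features: 64 channels driven by 8 shared latent
# factors, mimicking correlated attention heads.
mixing = rng.normal(size=(8, 64))
calib = rng.normal(size=(4096, 8)) @ mixing + 0.01 * rng.normal(size=(4096, 64))

mean, V, variances = fit_pca_basis(calib)  # computed once

# At inference time the same V is reused for every new cache.
new_kv = rng.normal(size=(16, 8)) @ mixing
coeffs = project(new_kv, mean, V)
assert np.allclose(reconstruct(coeffs, mean, V), new_kv)

# Energy compaction: the leading 8 components carry almost all the variance.
print(variances[:8].sum() / variances.sum())
```

Because the basis is fixed, the per-request cost is a single matrix multiply rather than a fresh decomposition for every prompt.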

2. Adaptive Quantization

The system exploits the PCA ordering to allocate a fixed bit budget across coordinates: high-variance components receive more bits, and low-variance components receive fewer. KVTC uses a dynamic programming (DP) algorithm to find the bit allocation that minimizes reconstruction error under that budget. Crucially, the DP often assigns 0 bits to trailing principal components, so those dimensions are dropped early, which reduces the work needed to encode and decode the cache.
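A knapsack-style DP for this allocation problem can be sketched as follows. The exact error model KVTC optimizes is not given here, so this sketch assumes the classical high-resolution model in which a component with variance v quantized to b bits contributes `v * 2**(-2*b)` error, and b = 0 drops the component entirely. The function `allocate_bits` and its `max_bits` cap are hypothetical.

```python
def allocate_bits(variances, budget, max_bits=8):
    """Distribute `budget` bits over PCA components to minimize total error.

    Error model (an assumption, see lead-in): component i with variance v_i
    quantized with b bits contributes v_i * 2**(-2*b); b = 0 drops the
    component and contributes its full variance.
    """
    inf = float("inf")
    best = [0.0] + [inf] * budget   # best[c]: min error so far using exactly c bits
    choices = []                    # choices[i][c]: bits given to component i at total c
    for v in variances:
        new_best = [inf] * (budget + 1)
        choice = [0] * (budget + 1)
        for used, err in enumerate(best):
            if err == inf:
                continue
            for b in range(min(max_bits, budget - used) + 1):
                cand = err + v * 2.0 ** (-2 * b)
                if cand < new_best[used + b]:
                    new_best[used + b] = cand
                    choice[used + b] = b
        best = new_best
        choices.append(choice)

    # Backtrack from the cheapest total (never more than `budget` bits).
    total = min(range(budget + 1), key=lambda c: best[c])
    bits = [0] * len(variances)
    for i in reversed(range(len(variances))):
        bits[i] = choices[i][total]
        total -= bits[i]
    return bits


print(allocate_bits([10.0, 3.0, 1.0, 0.2, 0.01], budget=6))  # [3, 2, 1, 0, 0]
```

The two trailing components receive 0 bits, which is the early dimensionality reduction described above. For this particular convex error model a greedy allocation would reach the same answer; the DP's advantage is that it works with any per-component error table, such as errors measured on calibration data.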

3. Entropy Coding

The quantized symbols are packed and compressed using the DEFLATE algorithm. To maintain speed, KVTC leverages the nvCOMP library, which enables parallel compression and decompression directly on the GPU.
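nvCOMP runs DEFLATE on the GPU; as a CPU stand-in, Python's standard `zlib` module implements the same DEFLATE format. The sketch below is an illustrative assumption rather than KVTC's actual storage layout: it uses 4-bit symbols, packs two per byte, and then applies DEFLATE. The helper names are hypothetical.

```python
import zlib

import numpy as np


def pack_nibbles(symbols):
    """Pack two 4-bit symbols per byte (assumes an even number of columns)."""
    return ((symbols[:, 0::2] << 4) | symbols[:, 1::2]).astype(np.uint8)


def unpack_nibbles(packed):
    out = np.empty((packed.shape[0], packed.shape[1] * 2), dtype=np.uint8)
    out[:, 0::2] = packed >> 4
    out[:, 1::2] = packed & 0x0F
    return out


def entropy_encode(symbols):
    """Bit-pack the quantized symbols, then compress them losslessly with DEFLATE."""
    return zlib.compress(pack_nibbles(symbols).tobytes(), 6)


def entropy_decode(blob, shape):
    packed = np.frombuffer(zlib.decompress(blob), dtype=np.uint8)
    return unpack_nibbles(packed.reshape(shape[0], shape[1] // 2))


rng = np.random.default_rng(0)
# Hypothetical 4-bit symbols (0..15) clustered around the middle level, as
# quantized near-Gaussian PCA coefficients tend to be.
symbols = np.clip(np.round(rng.normal(size=(1024, 32)) * 2 + 8), 0, 15).astype(np.uint8)

blob = entropy_encode(symbols)
assert np.array_equal(entropy_decode(blob, symbols.shape), symbols)  # lossless
print(f"packed: {symbols.size // 2} bytes, after DEFLATE: {len(blob)} bytes")
```

The gain comes from the skewed symbol distribution: middle levels are far more common than extreme ones, and DEFLATE's Huffman stage assigns them shorter codes.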

Protecting Critical Tokens

Not all tokens are compressed equally. KVTC avoids compressing two specific types of tokens because they contribute disproportionately to attention accuracy:

Attention Sinks: The 4 oldest tokens in the sequence.

Sliding Window: The 128 most recent tokens.

Ablation studies show that compressing these specific tokens can significantly lower or even collapse accuracy at high compression ratios.
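Keeping these tokens out of the coder amounts to slicing the cache along the sequence axis. This is a minimal sketch assuming a `(seq_len, dim)` layout per layer; `split_cache` and the constant names are hypothetical, while the 4-token and 128-token sizes come from the text above.

```python
import numpy as np

NUM_SINK_TOKENS = 4   # oldest tokens kept uncompressed (attention sinks)
WINDOW_TOKENS = 128   # most recent tokens kept uncompressed (sliding window)


def split_cache(kv, num_sink=NUM_SINK_TOKENS, window=WINDOW_TOKENS):
    """Split a (seq_len, dim) cache into (sinks, middle, recent).

    Only `middle` would be sent through the transform coder; the other two
    slices stay in their original precision. Short sequences yield an empty middle.
    """
    seq_len = kv.shape[0]
    start = min(num_sink, seq_len)
    end = max(start, seq_len - window)
    return kv[:start], kv[start:end], kv[end:]


kv = np.zeros((1000, 64), dtype=np.float16)
sinks, middle, recent = split_cache(kv)
print(len(sinks), len(middle), len(recent))  # 4 868 128
```

In this sketch, recomputing the split as the sequence grows means a token becomes eligible for compression once it falls out of the 128-token window.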

Benchmarks and Efficiency

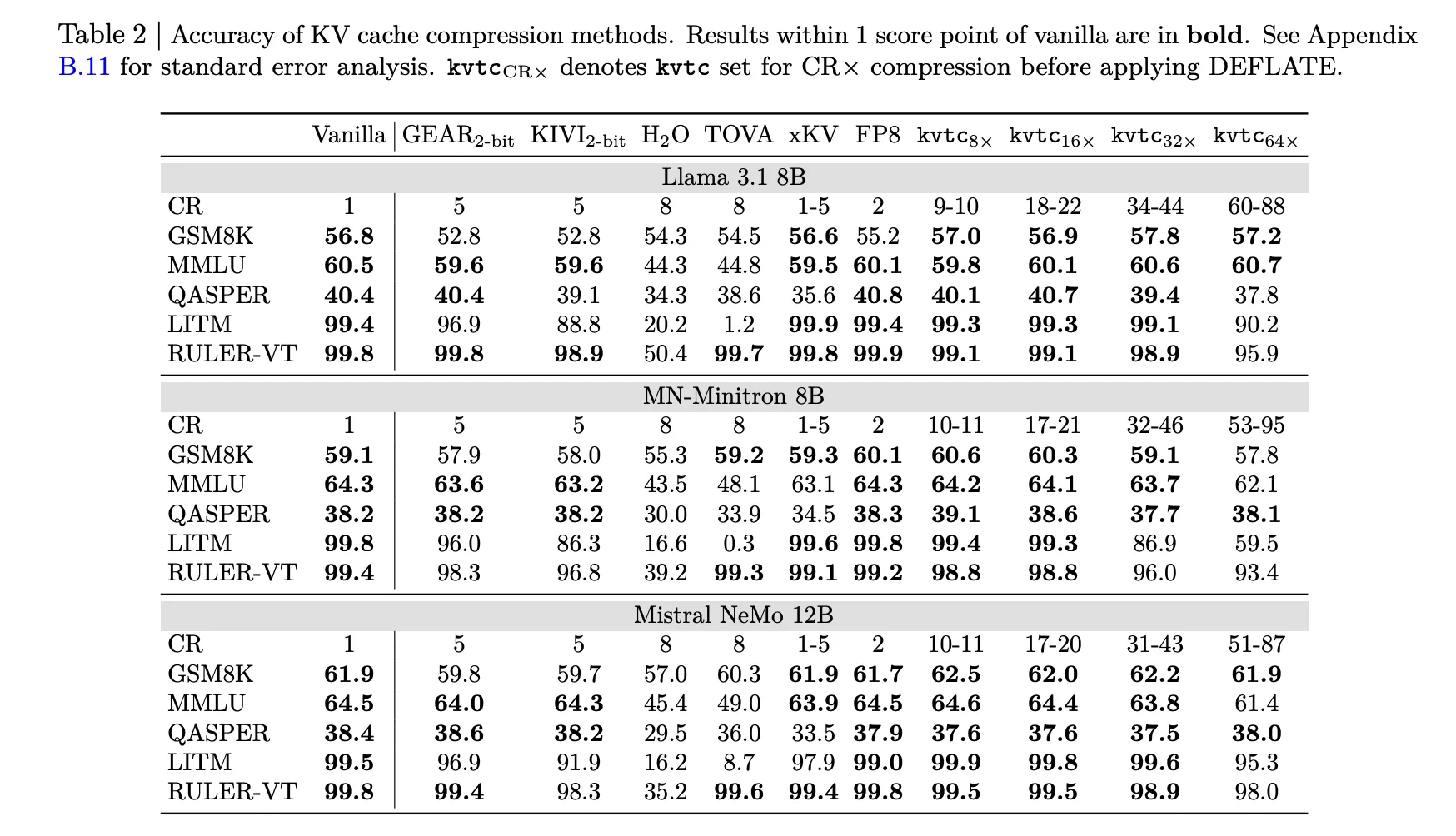

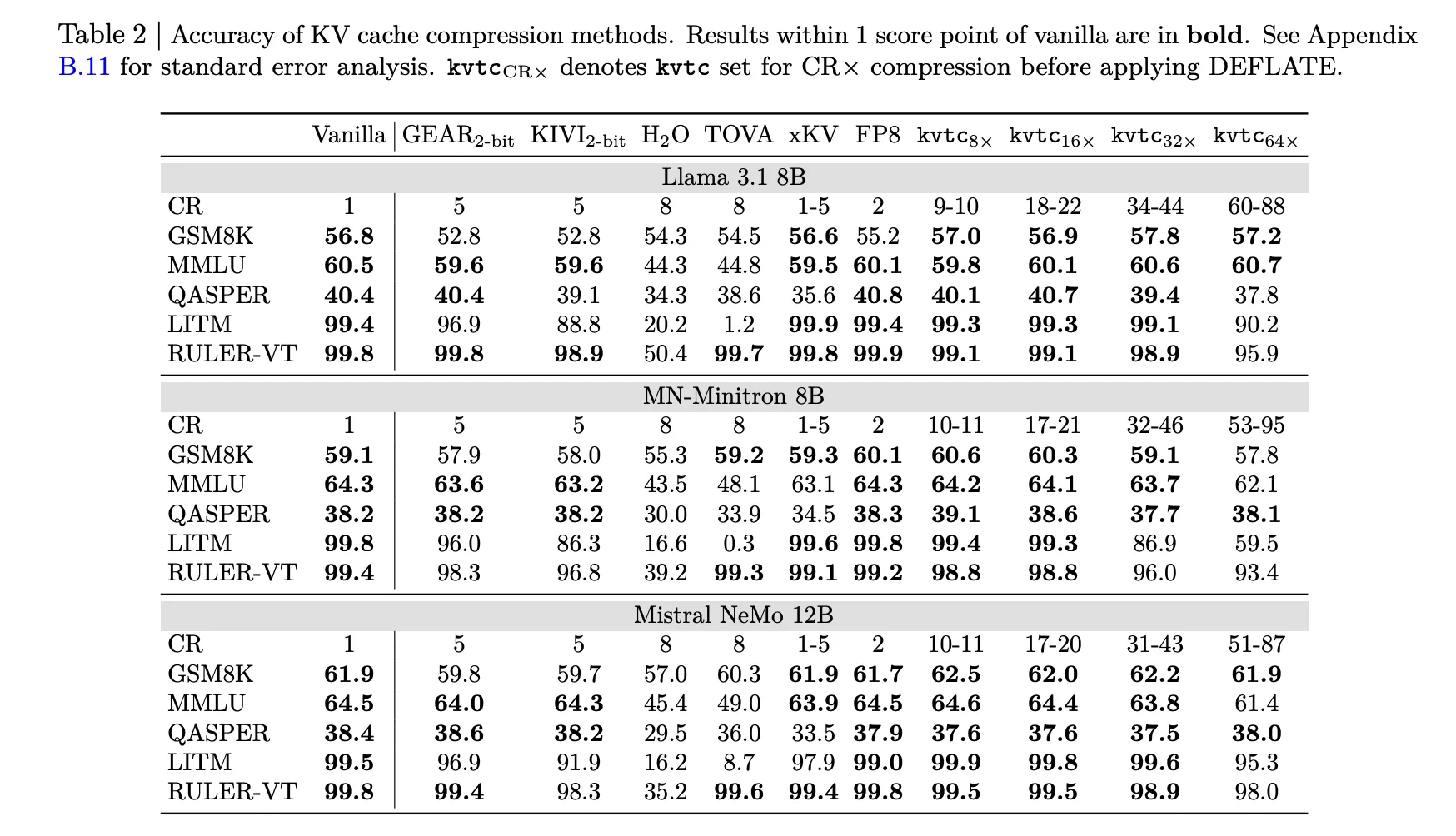

The research team tested KVTC with models like Llama-3.1, Mistral-NeMo, and R1-Qwen-2.5.

Accuracy: At 16x compression (roughly 20x after DEFLATE), compressed models consistently score within 1 point of the vanilla (uncompressed) models.
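The "16x (roughly 20x after DEFLATE)" figure can be unpacked with simple arithmetic. The sketch assumes the uncompressed cache stores 16-bit values, which is an assumption because the baseline precision is not stated here; `bits_per_value` is a hypothetical helper.

```python
BASELINE_BITS = 16  # assumed fp16/bf16 storage for uncompressed KV values


def bits_per_value(compression_ratio, baseline_bits=BASELINE_BITS):
    """Average storage per original cache value at a given compression ratio."""
    return baseline_bits / compression_ratio


before_deflate = bits_per_value(16)  # 1.0 bit per value after quantization
after_deflate = bits_per_value(20)   # 0.8 bits per value after DEFLATE
deflate_gain = 20 / 16               # 1.25x contributed by entropy coding alone

cache_gib = 16.0                     # e.g. a 16 GiB uncompressed cache
print(before_deflate, after_deflate, deflate_gain, cache_gib / 20)
```

Under this assumption, the quantizer averages about one bit per original value, and lossless entropy coding adds roughly a further 1.25x on top.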

TTFT Reduction: For an 8K context length, KVTC can reduce Time-To-First-Token (TTFT) by up to 8x compared to full recomputation.

Speed: Calibration is fast; for a 12B model, it can be completed within 10 minutes on an NVIDIA H100 GPU.

Storage Overhead: The extra data stored per model is small, representing only 2.4% of model parameters for Llama-3.3-70B.

KVTC is a practical building block for memory-efficient LLM serving. It does not modify model weights and is directly compatible with other token eviction methods.

Key Takeaways

High Compression with Low Accuracy Loss: KVTC achieves roughly 20x compression while keeping results within 1 score point of vanilla (uncompressed) models across most reasoning and long-context benchmarks.

Transform Coding Pipeline: The method utilizes a pipeline inspired by classical media compression, combining PCA-based feature decorrelation, adaptive quantization via dynamic programming, and lossless entropy coding (DEFLATE).

Critical Token Protection: To maintain model performance, KVTC avoids compressing the 4 oldest ‘attention sink’ tokens and a ‘sliding window’ of the 128 most recent tokens.

Operational Efficiency: The system is ‘tuning-free,’ requiring only a brief initial calibration (under 10 minutes for a 12B model). Calibration leaves model parameters unchanged and adds minimal storage overhead: only 2.4% of model parameters for a 70B model.

Significant Latency Reduction: By reducing the volume of data stored and transferred, KVTC can reduce Time-To-First-Token (TTFT) by up to 8x compared to the full recomputation of KV caches for long contexts.

Check out the Paper here.