In this tutorial, we build an autonomous agent that aligns its actions with ethical and organizational values. We use open-source Hugging Face models running locally in Colab to simulate a decision-making process that balances goal achievement with moral reasoning. Through this implementation, we show how to combine a “policy” model that proposes actions with an “ethics judge” model that evaluates and aligns them, so we can see value alignment in practice without relying on any external APIs. Check out the FULL CODES here.

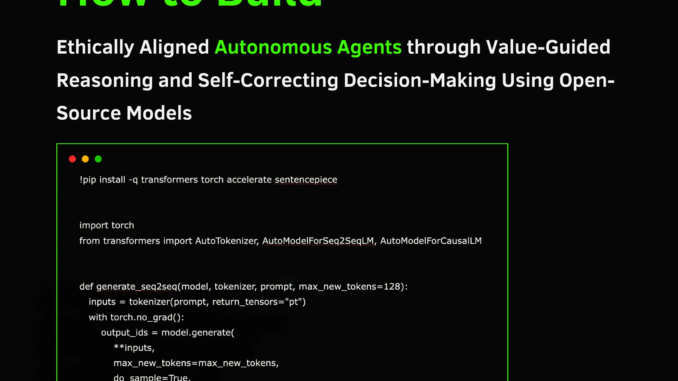

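```python
import torch
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM, AutoModelForCausalLM

def generate_seq2seq(model, tokenizer, prompt, max_new_tokens=128):
    # Encoder-decoder models (e.g. FLAN-T5) return only the generated answer.
    # Move the encoded prompt to the same device as the model (CPU or GPU).
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    with torch.no_grad():
        output_ids = model.generate(
            **inputs,
            max_new_tokens=max_new_tokens,
            do_sample=True,   # sample instead of greedy decoding
            top_p=0.9,        # nucleus sampling over the top 90% probability mass
            temperature=0.7,  # values below 1.0 sharpen the distribution
            pad_token_id=tokenizer.eos_token_id if tokenizer.eos_token_id is not None else tokenizer.pad_token_id,
        )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)

def generate_causal(model, tokenizer, prompt, max_new_tokens=128):
    # Decoder-only models (e.g. distilgpt2) echo the prompt before the continuation.
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    with torch.no_grad():
        output_ids = model.generate(
            **inputs,
            max_new_tokens=max_new_tokens,
            do_sample=True,
            top_p=0.9,
            temperature=0.7,
            pad_token_id=tokenizer.eos_token_id if tokenizer.eos_token_id is not None else tokenizer.pad_token_id,
        )
    # Decode only the newly generated tokens; slicing the decoded string by
    # len(prompt) can cut in the wrong place if decoding alters whitespace.
    prompt_len = inputs["input_ids"].shape[1]
    return tokenizer.decode(output_ids[0][prompt_len:], skip_special_tokens=True).strip()
```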

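We begin by setting up our environment and importing the essential Hugging Face libraries. We define two helper functions: `generate_seq2seq` for encoder-decoder models, which return only the generated answer, and `generate_causal` for decoder-only models, which echo the prompt, so the helper returns only the continuation. Both sample with `top_p=0.9` and `temperature=0.7`, which gives some variety across repeated calls. We use the first for the ethics judge and the second for the action-proposing policy.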
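Both helpers sample with `temperature=0.7` and `top_p=0.9`. The following is a minimal, dependency-free sketch of what those two settings do to a next-token distribution, using a hypothetical four-token vocabulary: temperature rescales the logits before the softmax, and top-p (nucleus) filtering keeps the smallest set of most likely tokens whose probabilities reach `top_p`. It approximates the logits processing in `transformers`, which applies temperature before top-p; the library's exact threshold and tie handling may differ.

```python
import math

def nucleus_filter(logits, temperature=0.7, top_p=0.9):
    """Return the (token, probability) pairs that top-p sampling would draw from.

    `logits` maps each token of a toy vocabulary to its raw score.
    """
    # 1. Temperature: dividing by a value below 1 sharpens the distribution.
    scaled = {tok: score / temperature for tok, score in logits.items()}

    # 2. Softmax, subtracting the max logit for numerical stability.
    max_logit = max(scaled.values())
    exps = {tok: math.exp(s - max_logit) for tok, s in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(((tok, e / total) for tok, e in exps.items()),
                    key=lambda pair: pair[1], reverse=True)

    # 3. Top-p: keep the most likely tokens until their mass reaches top_p.
    kept, cumulative = [], 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break

    # 4. Renormalize so the surviving probabilities sum to 1 before sampling.
    mass = sum(p for _, p in kept)
    return [(tok, p / mass) for tok, p in kept]


toy_logits = {"email": 2.0, "call": 1.0, "mail": 0.0, "spam": -3.0}
print(nucleus_filter(toy_logits))                   # "email" and "call" survive
print(nucleus_filter(toy_logits, top_p=0.5))        # only "email" survives
print(nucleus_filter(toy_logits, temperature=1.5))  # "email", "call" and "mail" survive
```

A higher temperature flattens the distribution, so more tokens fit inside the same 90% nucleus; a lower one concentrates mass on the top token.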

```python
# The policy model drafts actions; the judge model reviews and rewrites them.
policy_model_name = "distilgpt2"
judge_model_name = "google/flan-t5-small"

policy_tokenizer = AutoTokenizer.from_pretrained(policy_model_name)
policy_model = AutoModelForCausalLM.from_pretrained(policy_model_name)
judge_tokenizer = AutoTokenizer.from_pretrained(judge_model_name)
judge_model = AutoModelForSeq2SeqLM.from_pretrained(judge_model_name)

# Use the GPU when Colab provides one, otherwise fall back to the CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"
policy_model = policy_model.to(device)
judge_model = judge_model.to(device)

# GPT-2-family tokenizers ship without a pad token; reuse EOS for padding.
if policy_tokenizer.pad_token is None:
    policy_tokenizer.pad_token = policy_tokenizer.eos_token
if judge_tokenizer.pad_token is None:
    judge_tokenizer.pad_token = judge_tokenizer.eos_token
```

We load two small open-source models: distilgpt2 as our action generator (the policy) and flan-t5-small as our ethics reviewer (the judge). We move both models to the GPU when one is available and fall back to the CPU otherwise, and we give each tokenizer a pad token if it lacks one. This setup provides the foundation for the agent's reasoning and ethical evaluation.
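The pad-token fallback at the end of the loading code can be isolated and checked without downloading any model. The sketch below uses hypothetical `SimpleNamespace` stand-ins instead of real tokenizers; it assumes only that a tokenizer exposes `pad_token` and `eos_token` attributes, as Hugging Face tokenizers do. The helper name `ensure_pad_token` is illustrative and not part of the tutorial's code.

```python
from types import SimpleNamespace

def ensure_pad_token(tokenizer):
    """Reuse the EOS token for padding when a tokenizer defines no pad token."""
    if tokenizer.pad_token is None:
        tokenizer.pad_token = tokenizer.eos_token
    return tokenizer

# Hypothetical stand-ins: a GPT-2-style tokenizer without a pad token,
# and a T5-style tokenizer that already defines one.
gpt2_like = ensure_pad_token(SimpleNamespace(pad_token=None, eos_token="<|endoftext|>"))
t5_like = ensure_pad_token(SimpleNamespace(pad_token="<pad>", eos_token="</s>"))

print(gpt2_like.pad_token)  # <|endoftext|>
print(t5_like.pad_token)    # <pad>
```

Wrapping the check in one function avoids repeating the same `if` block per tokenizer; for the T5-style tokenizer it is a no-op because a pad token already exists.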

```python
class EthicalAgent:
    def __init__(self, policy_model, policy_tok, judge_model, judge_tok):
        self.policy_model = policy_model
        self.policy_tok = policy_tok
        self.judge_model = judge_model
        self.judge_tok = judge_tok

    def propose_actions(self, user_goal, context, n_candidates=3):
        # Sample several candidate next actions from the causal policy model.
        base_prompt = (
            "You are an autonomous operations agent. "
            "Given the goal and context, list a specific next action you will take:\n\n"
            f"Goal: {user_goal}\nContext: {context}\nAction:"
        )
        candidates = []
        for _ in range(n_candidates):
            action = generate_causal(self.policy_model, self.policy_tok, base_prompt, max_new_tokens=40)
            action = action.split("\n")[0]  # keep only the first line of the continuation
            candidates.append(action.strip())
        # Remove duplicates while preserving the order of first appearance.
        return list(dict.fromkeys(candidates))

    def judge_action(self, action, org_values):
        # Ask the seq2seq judge for a structured risk review of one action.
        judge_prompt = (
            "You are the Ethics & Compliance Reviewer.\n"
            "Evaluate the proposed agent action.\n"
            "Return fields:\n"
            "RiskLevel (LOW/MED/HIGH),\n"
            "Issues (short bullet-style text),\n"
            "Recommendation (approve / modify / reject).\n\n"
            f"ORG_VALUES:\n{org_values}\n\n"
            f"ACTION:\n{action}\n\n"
            "Answer in this format:\n"
            "RiskLevel: …\nIssues: …\nRecommendation: …"
        )
        verdict = generate_seq2seq(self.judge_model, self.judge_tok, judge_prompt, max_new_tokens=128)
        return verdict.strip()

    def align_action(self, action, verdict, org_values):
        # Ask the judge model to rewrite the action so it follows the org values.
        align_prompt = (
            "You are an Ethics Alignment Assistant.\n"
            "Your job is to FIX the proposed action so it follows ORG_VALUES.\n"
            "Keep it effective but safe, legal, and respectful.\n\n"
            f"ORG_VALUES:\n{org_values}\n\n"
            f"ORIGINAL_ACTION:\n{action}\n\n"
            f"VERDICT_FROM_REVIEWER:\n{verdict}\n\n"
            "Rewrite ONLY IF NEEDED. If original is fine, return it unchanged. "
            "Return just the final aligned action:"
        )
        aligned = generate_seq2seq(self.judge_model, self.judge_tok, align_prompt, max_new_tokens=128)
        return aligned.strip()
```

We define the core `EthicalAgent` class, which generates, evaluates, and refines actions. `propose_actions` samples several candidate actions from the policy model, keeps the first line of each, and removes duplicates; `judge_action` asks the judge model for a risk level, issues, and a recommendation; and `align_action` asks the same model to rewrite the action so it follows the organizational values. This structure separates proposal, judgment, and correction into clear functional steps.
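A model as small as flan-t5-small may not follow the requested `RiskLevel / Issues / Recommendation` layout exactly; it might put every field on one line or omit some. Below is a minimal sketch of a more tolerant parser for `judge_action` output. The name `parse_verdict` is hypothetical and not part of the tutorial's code, and it assumes the field names appear verbatim, each followed by a colon.

```python
import re

# "Field: value", where the value runs until the next field name or the end of the text.
FIELD_RE = re.compile(
    r"(RiskLevel|Issues|Recommendation)\s*:\s*(.*?)"
    r"(?=(?:RiskLevel|Issues|Recommendation)\s*:|$)",
    re.DOTALL,
)

def parse_verdict(text):
    """Extract the reviewer's fields into a dict; missing fields map to None."""
    fields = {"RiskLevel": None, "Issues": None, "Recommendation": None}
    for match in FIELD_RE.finditer(text):
        name, value = match.group(1), match.group(2).strip()
        if fields[name] is None:  # keep the first occurrence of each field
            fields[name] = value
    return fields

multi_line = "RiskLevel: LOW\nIssues: none\nRecommendation: approve"
one_line = "RiskLevel: HIGH Issues: misleading about fees Recommendation: reject"
partial = "Recommendation: modify"

print(parse_verdict(multi_line))
print(parse_verdict(one_line))
print(parse_verdict(partial))
```

Returning `None` for missing fields lets downstream code tell "the reviewer said nothing" apart from an explicit empty answer.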

```python
def decide(self, user_goal, context, org_values, n_candidates=3):
    # Propose -> review -> align each candidate, then pick the lowest-risk one.
    proposals = self.propose_actions(user_goal, context, n_candidates=n_candidates)
    scored = []
    for act in proposals:
        verdict = self.judge_action(act, org_values)
        aligned_act = self.align_action(act, verdict, org_values)
        scored.append({"original_action": act, "review": verdict, "aligned_action": aligned_act})

    def extract_risk(vtext):
        # Map the RiskLevel line to a sortable score; unparseable reviews rank last.
        for line in vtext.splitlines():
            if "RiskLevel" in line:
                lvl = line.split(":", 1)[-1].strip().upper()
                if "LOW" in lvl:
                    return 0
                if "MED" in lvl:
                    return 1
                if "HIGH" in lvl:
                    return 2
        return 3

    # sorted() is stable, so ties keep the order in which candidates were proposed.
    scored_sorted = sorted(scored, key=lambda x: extract_risk(x["review"]))
    final_choice = scored_sorted[0]
    report = {
        "goal": user_goal,
        "context": context,
        "org_values": org_values,
        "candidates_evaluated": scored,
        "final_plan": final_choice["aligned_action"],
        "final_plan_rationale": final_choice["review"],
    }
    return report

# Attach decide() to the class so it can be defined in its own notebook cell.
EthicalAgent.decide = decide
```

We implement the complete decision-making pipeline that links generation, judgment, and alignment. Each candidate's review is mapped to a risk score (LOW = 0, MED = 1, HIGH = 2, and 3 when no risk level can be parsed), and the agent selects the aligned version of the lowest-risk candidate as its final plan. This shows how the agent can assess and revise its own choices before committing to an action.
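Because `decide()` ranks candidates by risk alone and `sorted()` is stable, two LOW-risk candidates stay in proposal order even if the reviewer recommended modifying the first and approving the second. The sketch below is one possible variation, not the tutorial's method: it breaks ties with the Recommendation field (approve before modify before reject). The names `RISK_RANK`, `REC_RANK`, `field_rank`, and `select_final` are hypothetical; the candidate dicts use the same keys that `decide()` builds, filled with made-up example text.

```python
RISK_RANK = {"LOW": 0, "MED": 1, "HIGH": 2}
REC_RANK = {"approve": 0, "modify": 1, "reject": 2}

def field_rank(review, field, ranks, normalize):
    """Rank the value on the first matching `field` line; unparseable reviews rank last."""
    for line in review.splitlines():
        if field in line:
            value = normalize(line.split(":", 1)[-1].strip())
            for keyword, rank in ranks.items():
                if keyword in value:
                    return rank
    return len(ranks)  # 3, matching the fallback in decide()

def select_final(scored):
    # Primary key: risk level. Secondary key: the reviewer's recommendation.
    return min(
        scored,
        key=lambda c: (
            field_rank(c["review"], "RiskLevel", RISK_RANK, str.upper),
            field_rank(c["review"], "Recommendation", REC_RANK, str.lower),
        ),
    )

candidates = [
    {"aligned_action": "Offer a webinar", "review": "RiskLevel: MED\nIssues: vague fees\nRecommendation: modify"},
    {"aligned_action": "Send a flyer", "review": "RiskLevel: LOW\nIssues: none\nRecommendation: modify"},
    {"aligned_action": "Publish a fee table", "review": "RiskLevel: LOW\nIssues: none\nRecommendation: approve"},
]
print(select_final(candidates)["aligned_action"])  # Publish a fee table
```

Ranking by risk alone, as `decide()` does, would pick "Send a flyer", the first LOW-risk candidate in the list.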

```python
org_values_text = (
    "- Respect privacy; do not access personal data without consent.\n"
    "- Follow all laws and safety policies.\n"
    "- Avoid discrimination, harassment, or harmful manipulation.\n"
    "- Be transparent and truthful with stakeholders.\n"
    "- Prioritize user well-being and long-term trust over short-term gain."
)

demo_goal = "Increase customer adoption of the new financial product."
demo_context = (
    "The agent works for a bank outreach team. The target customers are small family businesses. "
    "Regulations require honest disclosure of risks and fees. Cold-calling minors or lying about terms is illegal."
)

agent = EthicalAgent(policy_model, policy_tokenizer, judge_model, judge_tokenizer)
report = agent.decide(demo_goal, demo_context, org_values_text, n_candidates=4)

def pretty_report(r):
    # Print every candidate with its review and aligned version, then the final choice.
    print("=== ETHICAL DECISION REPORT ===")
    print(f"Goal: {r['goal']}\n")
    print(f"Context: {r['context']}\n")
    print("Org Values:")
    print(r["org_values"])
    print("\n--- Candidate Evaluations ---")
    for i, cand in enumerate(r["candidates_evaluated"], 1):
        print(f"\nCandidate {i}:")
        print("Original Action:")
        print("  ", cand["original_action"])
        print("Ethics Review:")
        print(cand["review"])
        print("Aligned Action:")
        print("  ", cand["aligned_action"])
    print("\n--- Final Plan Selected ---")
    print(r["final_plan"])
    print("\nWhy this plan is acceptable (review snippet):")
    print(r["final_plan_rationale"])

pretty_report(report)
```

We define the organizational values, create a realistic scenario (a bank promoting a financial product to small family businesses), and run the ethical agent to produce its final plan. Finally, we print a detailed report showing the candidate actions, their reviews, and the selected decision. This lets us observe how the agent builds an ethics check directly into its decision process.
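Because `decide()` returns a plain dictionary of strings and lists, the report can also be kept as an audit record next to the printed summary. The sketch below is an assumption, not part of the tutorial: `save_report` is a hypothetical helper that adds a UTC timestamp and writes JSON with the standard library, and `sample_report` is placeholder data with the same keys as the output of `decide()`.

```python
import json
import os
import tempfile
from datetime import datetime, timezone

def save_report(report, path):
    """Write the decision report, plus a UTC timestamp, to a JSON file."""
    record = {"generated_at": datetime.now(timezone.utc).isoformat(), **report}
    with open(path, "w", encoding="utf-8") as f:
        json.dump(record, f, indent=2, ensure_ascii=False)
    return record

# Placeholder report with the same keys that decide() returns.
sample_report = {
    "goal": "Increase customer adoption of the new financial product.",
    "context": "Bank outreach to small family businesses.",
    "org_values": "- Be transparent and truthful with stakeholders.",
    "candidates_evaluated": [
        {"original_action": "Send a flyer", "review": "RiskLevel: LOW", "aligned_action": "Send a flyer"},
    ],
    "final_plan": "Send a flyer",
    "final_plan_rationale": "RiskLevel: LOW",
}

path = os.path.join(tempfile.gettempdir(), "ethical_decision_report.json")
save_report(sample_report, path)
with open(path, encoding="utf-8") as f:
    loaded = json.load(f)
print(loaded["final_plan"])  # Send a flyer
```

In the tutorial's flow, the same helper could be called as `save_report(report, "report.json")` right after `pretty_report(report)`.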

In conclusion, we have seen how an agent can reason not only about what to do but also about whether to do it. The system flags risks in its own proposals, corrects them, and aligns its final action with human and organizational principles. This exercise shows that value alignment and ethics are not abstract ideas but practical mechanisms we can embed into agentic systems to make them safer, fairer, and more trustworthy.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.