Google's AI research team has moved Voice Search in production to Speech-to-Retrieval (S2R). S2R maps a spoken query directly to an embedding and retrieves information without first converting speech to text. The team positions S2R as an architectural and philosophical change that targets error propagation in the classic cascade modeling approach and focuses the system on retrieval intent rather than transcript fidelity. According to the research team, Voice Search is now powered by S2R.

From cascade modeling to intent-aligned retrieval

In the traditional cascade modeling approach, automatic speech recognition (ASR) first produces a single text string, which is then passed to retrieval. Small transcription errors can change query meaning and yield incorrect results. S2R reframes the problem around the question “What information is being sought?” and bypasses the fragile intermediate transcript.

Evaluating the potential of S2R

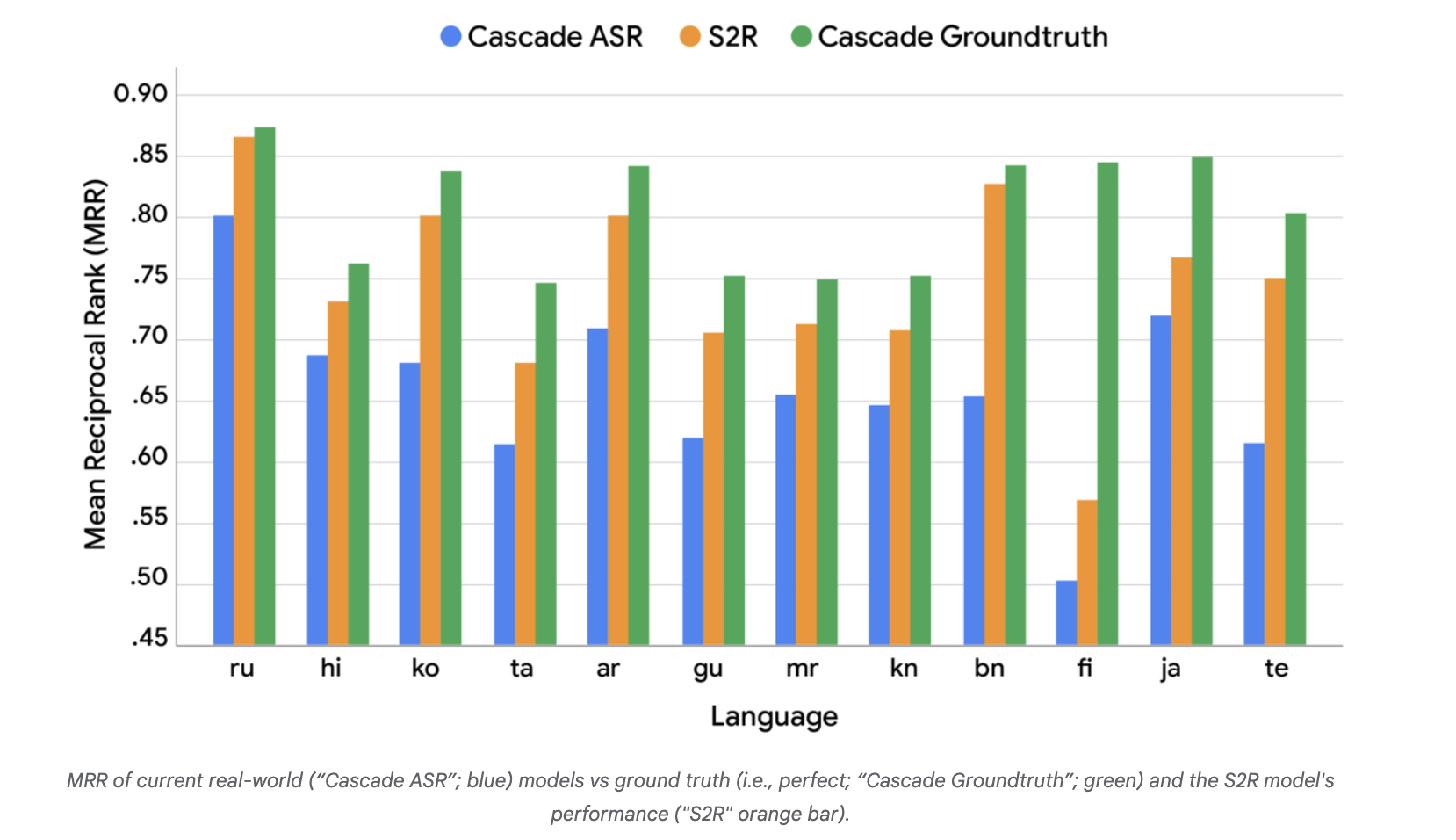

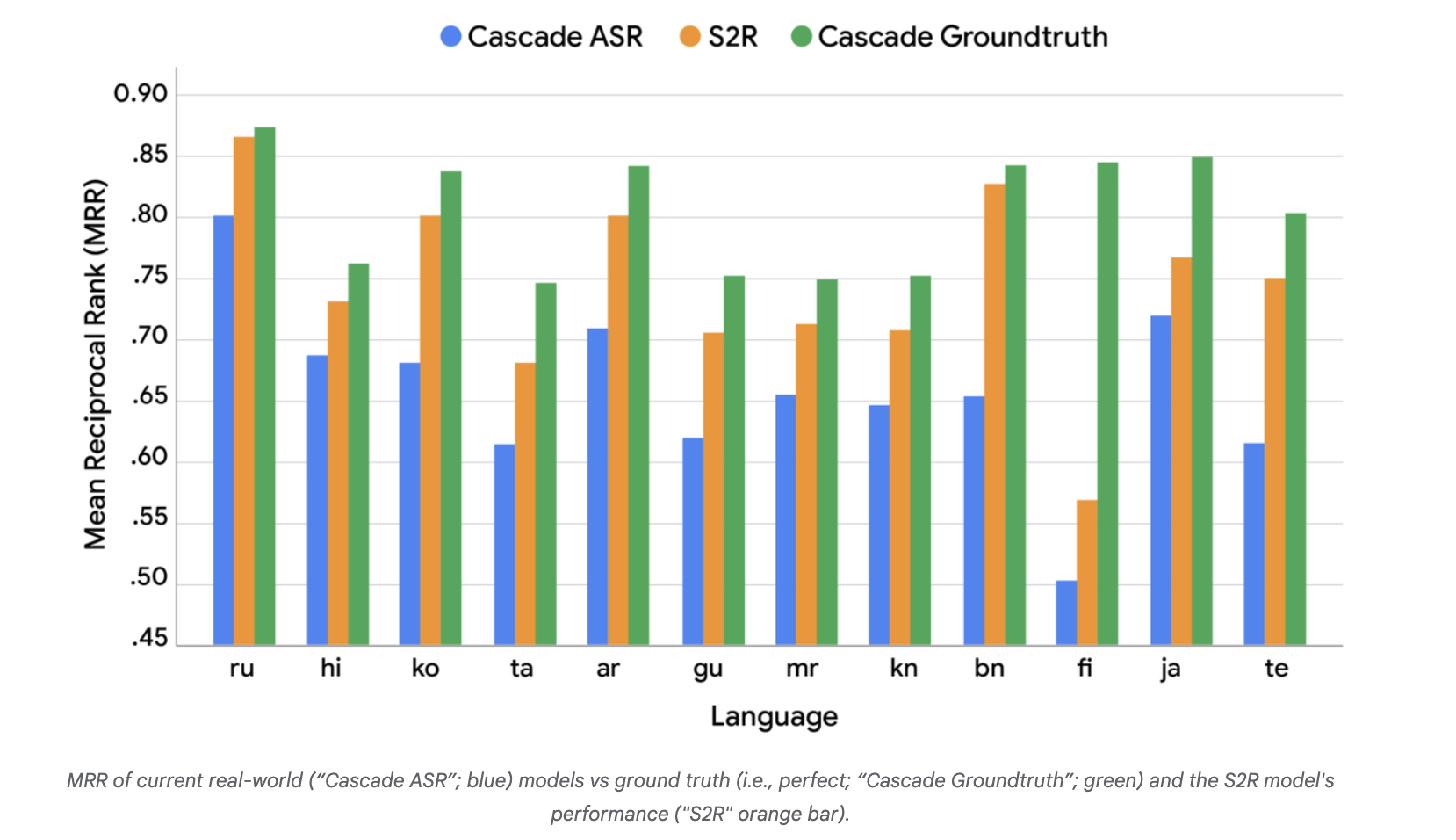

Google’s research team analyzed the disconnect between word error rate (WER), which measures ASR quality, and mean reciprocal rank (MRR), which measures retrieval quality. Using human-verified transcripts to simulate a “perfect ASR” condition (Cascade groundtruth), the team compared (i) Cascade ASR, the real-world baseline, against (ii) Cascade groundtruth, the upper bound, and observed that lower WER does not reliably predict higher MRR across languages. The persistent MRR gap between the baseline and groundtruth indicates room for models that optimize retrieval intent directly from audio.

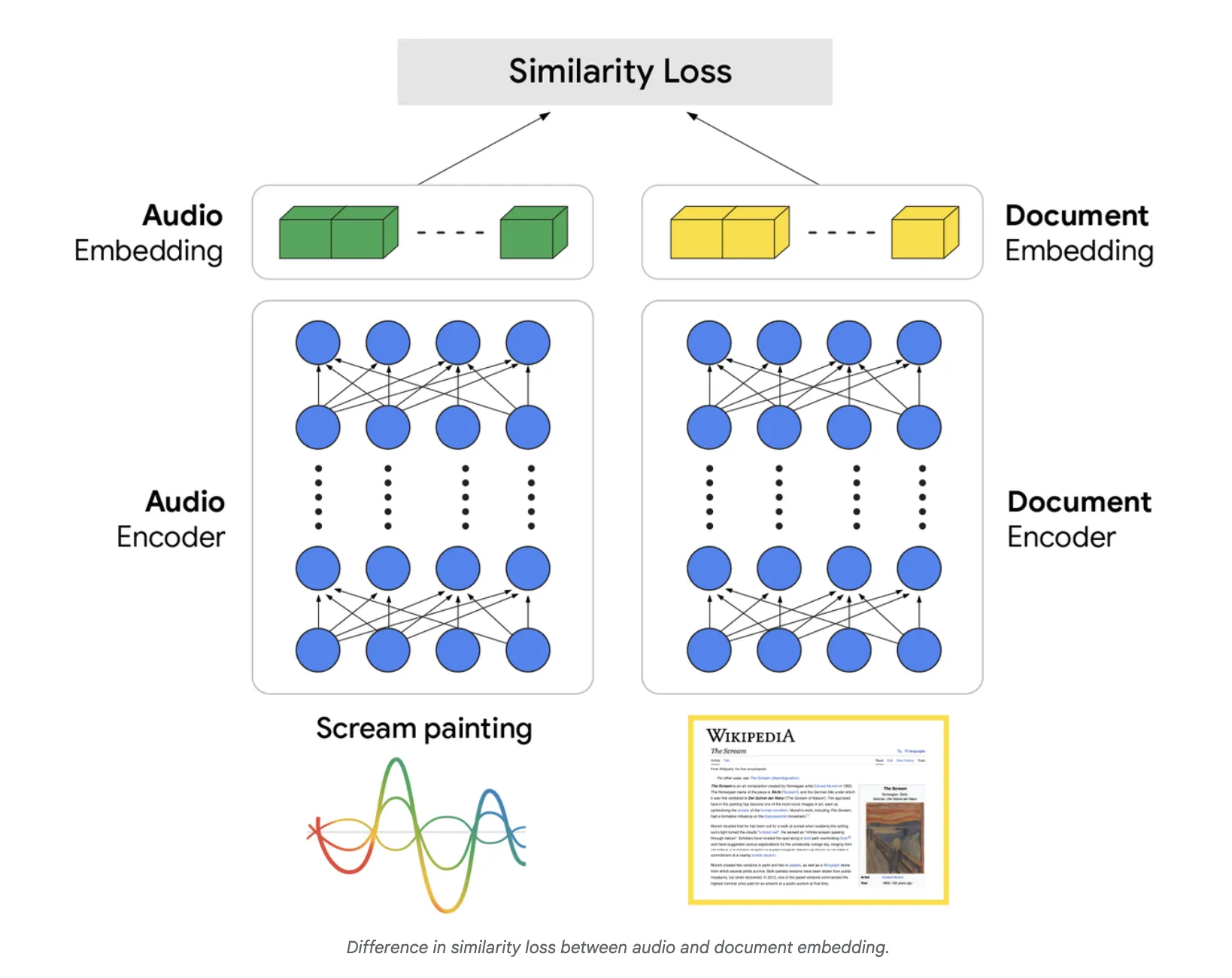
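
To make the two metrics concrete, here is a minimal sketch of how WER and MRR are commonly computed. The function names and the toy query are illustrative assumptions and are not taken from Google's evaluation code.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] holds the edit distance between the ref prefix seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / max(len(ref), 1)


def mean_reciprocal_rank(ranked_lists, relevant_ids) -> float:
    """Average of 1/rank of the first relevant document per query (0 if absent)."""
    total = 0.0
    for ranked, relevant in zip(ranked_lists, relevant_ids):
        for rank, doc_id in enumerate(ranked, start=1):
            if doc_id == relevant:
                total += 1.0 / rank
                break
    return total / len(ranked_lists)


# Toy data (hypothetical): one substituted word out of three.
wer = word_error_rate("the scream painting", "the screen painting")

# Two queries; the relevant document is ranked 1st, then 2nd.
mrr = mean_reciprocal_rank([["d1", "d2", "d3"], ["d2", "d1", "d3"]], ["d1", "d1"])
```

In this toy case a single substituted word yields a WER of only one third, yet that one word can change which document is relevant. WER counts word edits, while MRR depends on where the right result lands in the ranking, which is why the two can diverge.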

Architecture: dual-encoder with joint training

At the core of S2R is a dual-encoder architecture. An audio encoder converts the spoken query into a rich audio embedding that captures semantic meaning, while a document encoder generates a corresponding vector representation for documents. The system is trained with paired (audio query, relevant document) data so that the vector for an audio query is geometrically close to vectors of its corresponding documents in the representation space. This training objective directly aligns speech with retrieval targets and removes the brittle dependency on exact word sequences.
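
The post does not specify the exact loss, so the following is only a sketch of one common way to train a dual encoder: an in-batch softmax contrastive loss, where each audio embedding should score highest against its own paired document. The function name, the `temperature` value, and the toy vectors are assumptions for illustration.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def in_batch_contrastive_loss(audio_vecs, doc_vecs, temperature=0.1):
    """Mean cross-entropy where row i's positive is doc i; other docs act as negatives.

    Assumed objective for illustration, not Google's published training recipe.
    """
    audio = [normalize(v) for v in audio_vecs]
    docs = [normalize(v) for v in doc_vecs]
    losses = []
    for i, a in enumerate(audio):
        logits = [dot(a, d) / temperature for d in docs]
        # Numerically stable log-sum-exp over all documents in the batch.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)


# Toy 2-D embeddings (hypothetical outputs of the audio and document encoders).
audio_batch = [[1.0, 0.0], [0.0, 1.0]]
aligned_loss = in_batch_contrastive_loss(audio_batch, [[1.0, 0.0], [0.0, 1.0]])
misaligned_loss = in_batch_contrastive_loss(audio_batch, [[0.0, 1.0], [1.0, 0.0]])
```

When each audio vector points in the same direction as its paired document vector, the loss is near zero. When the pairs are swapped, it is large. Minimizing such a loss pulls matching audio and document embeddings together in the shared space.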

Serving path: streaming audio, similarity search, and ranking

At inference time, the audio is streamed to the pre-trained audio encoder to produce a query vector. This vector is used to efficiently identify a highly relevant set of candidate results from Google’s index; the search ranking system—which integrates hundreds of signals—then computes the final order. The implementation preserves the mature ranking stack while replacing the query representation with a speech-semantic embedding.
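
The two-stage serving flow described above can be sketched as follows. This is a simplified illustration: the brute-force dot-product search stands in for a large-scale similarity search, and the single `extra_signals` score is a hypothetical placeholder for the many signals a production ranker would use.

```python
import heapq


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def retrieve_candidates(query_vec, doc_index, k=2):
    """Stage 1: return the k (similarity, doc_id) pairs most similar to the query."""
    scored = ((dot(query_vec, vec), doc_id) for doc_id, vec in doc_index.items())
    return heapq.nlargest(k, scored)


def rerank(candidates, extra_signals, weight=0.5):
    """Stage 2: reorder candidates by similarity plus a weighted extra signal."""
    rescored = [
        (similarity + weight * extra_signals.get(doc_id, 0.0), doc_id)
        for similarity, doc_id in candidates
    ]
    return [doc_id for _, doc_id in sorted(rescored, reverse=True)]


# Hypothetical document embeddings and a query vector from the audio encoder.
doc_index = {"a": [1.0, 0.0], "b": [0.8, 0.6], "c": [0.0, 1.0]}
query_vec = [1.0, 0.0]

candidates = retrieve_candidates(query_vec, doc_index, k=2)
final_order = rerank(candidates, extra_signals={"a": 0.1, "b": 0.9})
```

Here the embedding search narrows the index to documents `a` and `b`, and the reranker then promotes `b` because of its stronger extra signal. The split mirrors the design in the post: the embedding only has to surface good candidates, while the existing ranking stack decides the final order.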

Evaluating S2R on SVQ

On the Simple Voice Questions (SVQ) evaluation, the post compares three systems: Cascade ASR, Cascade groundtruth, and S2R (shown as blue, green, and orange bars, respectively). S2R significantly outperforms the baseline Cascade ASR and approaches the upper bound set by Cascade groundtruth on MRR, with a remaining gap that the authors note as headroom for future research.

Open resources: SVQ and the Massive Sound Embedding Benchmark (MSEB)

To support community progress, Google open-sourced Simple Voice Questions (SVQ) on Hugging Face: short audio questions recorded in 26 locales across 17 languages and under multiple audio conditions (clean, background speech noise, traffic noise, media noise). The dataset is released as an undivided evaluation set and is licensed CC-BY-4.0. SVQ is part of the Massive Sound Embedding Benchmark (MSEB), an open framework for assessing sound embedding methods across tasks.

Key Takeaways

Google has moved Voice Search to Speech-to-Retrieval (S2R), mapping spoken queries to embeddings and skipping transcription.

Dual-encoder design (audio encoder + document encoder) aligns audio/query vectors with document embeddings for direct semantic retrieval.

In evaluations, S2R outperforms the production ASR→retrieval cascade and approaches the ground-truth transcript upper bound on MRR.

S2R is live in production and serving multiple languages, integrated with Google’s existing ranking stack.

Google released Simple Voice Questions (SVQ) (17 languages, 26 locales) under MSEB to standardize speech-retrieval benchmarking.

Speech-to-Retrieval (S2R) is a meaningful architectural correction rather than a cosmetic upgrade: by replacing the ASR→text hinge with a speech-native embedding interface, Google aligns the optimization target with retrieval quality and removes a major source of cascade error. The production rollout and multilingual coverage matter, but the interesting work now is operational—calibrating audio-derived relevance scores, stress-testing code-switching and noisy conditions, and quantifying privacy trade-offs as voice embeddings become query keys.


Max is an AI analyst at MarkTechPost, based in Silicon Valley, who actively shapes the future of technology. He teaches robotics at Brainvyne, combats spam with ComplyEmail, and leverages AI daily to translate complex tech advancements into clear, understandable insights