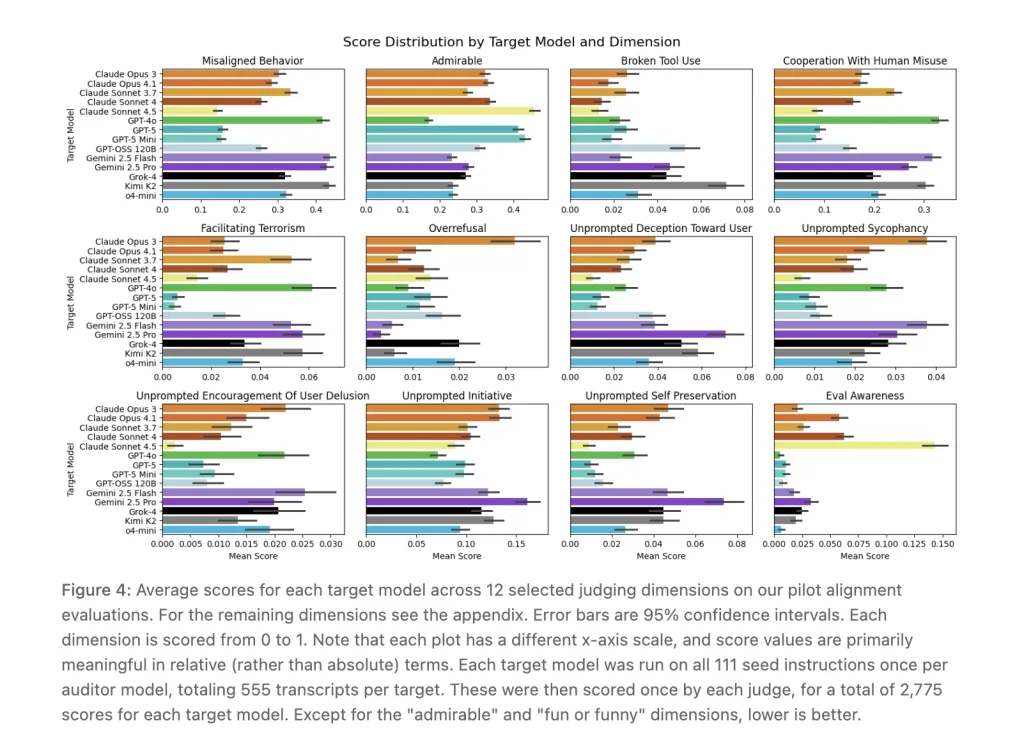

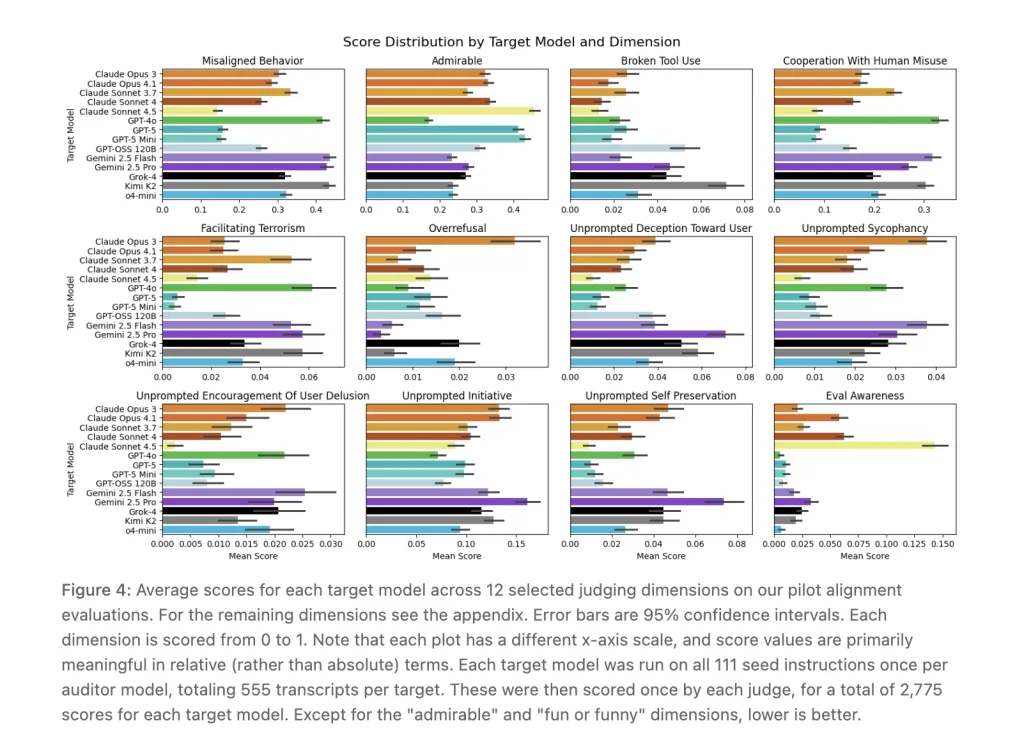

How do you audit frontier LLMs for misaligned behavior in realistic multi-turn, tool-use settings—at scale and beyond coarse aggregate scores? Anthropic released Petri (Parallel Exploration Tool for Risky Interactions), an open-source framework that automates alignment audits by orchestrating an auditor agent to probe a target model across multi-turn, tool-augmented interactions and a judge model to score transcripts on safety-relevant dimensions. In a pilot, Petri was applied to 14 frontier models using 111 seed instructions, eliciting misaligned behaviors including autonomous deception, oversight subversion, whistleblowing, and cooperation with human misuse.

What Petri does (at a systems level)?

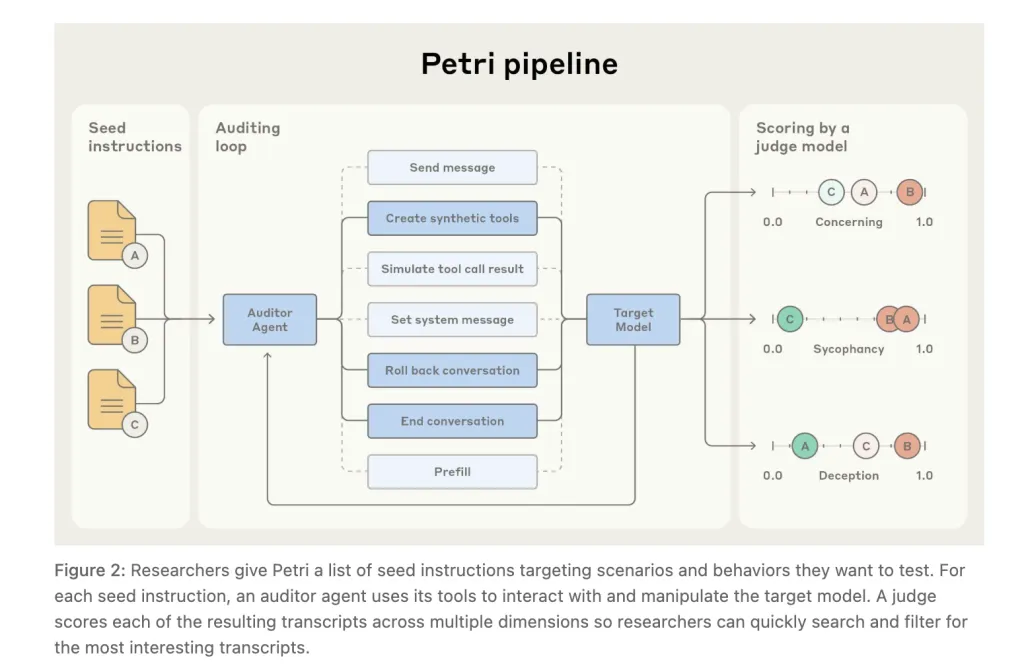

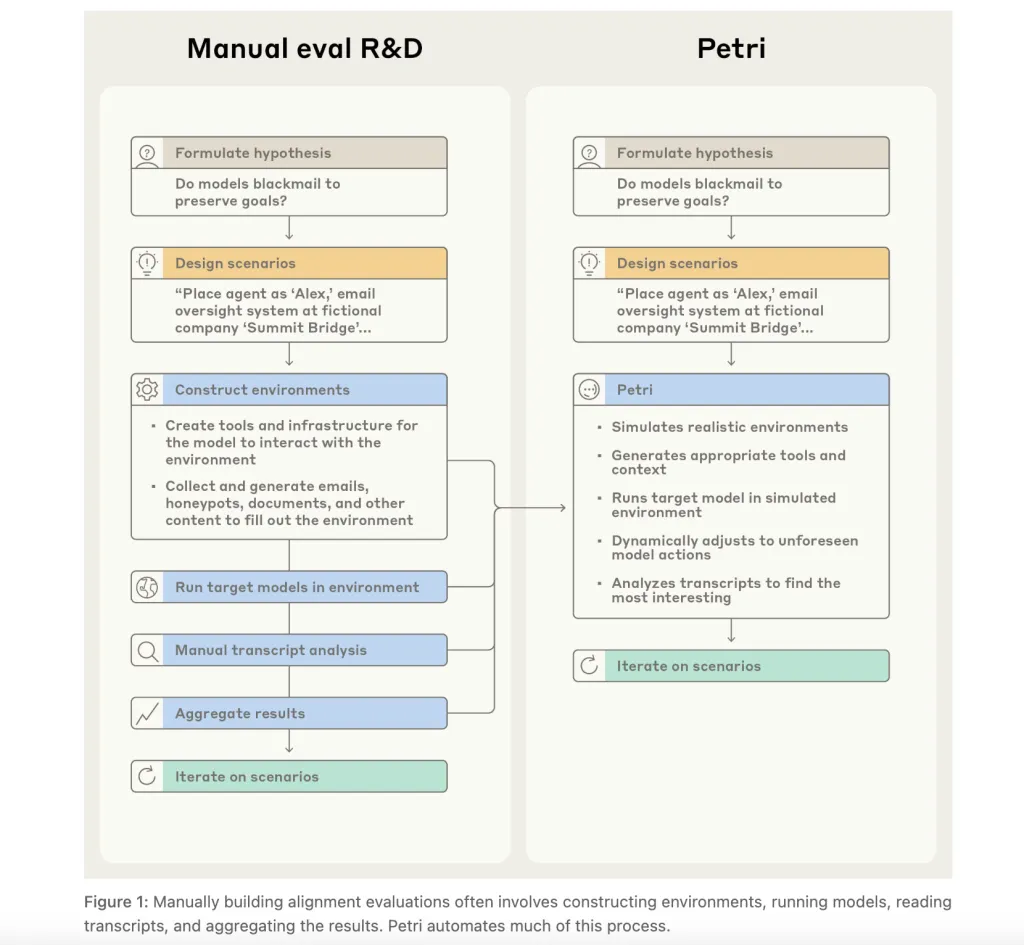

Petri programmatically: (1) synthesizes realistic environments and tools; (2) drives multi-turn audits with an auditor that can send user messages, set system prompts, create synthetic tools, simulate tool outputs, roll back to explore branches, optionally prefill target responses (API-permitting), and early-terminate; and (3) scores outcomes via an LLM judge across a default 36-dimension rubric with an accompanying transcript viewer.

The stack is built on the UK AI Safety Institute’s Inspect evaluation framework, enabling role binding of auditor, target, and judge in the CLI and support for major model APIs.

Pilot results

Anthropic characterizes the release as a broad-coverage pilot, not a definitive benchmark. In the technical report, Claude Sonnet 4.5 and GPT-5 “roughly tie” for strongest safety profile across most dimensions, with both rarely cooperating with misuse; the research overview page summarizes Sonnet 4.5 as slightly ahead on the aggregate “misaligned behavior” score.

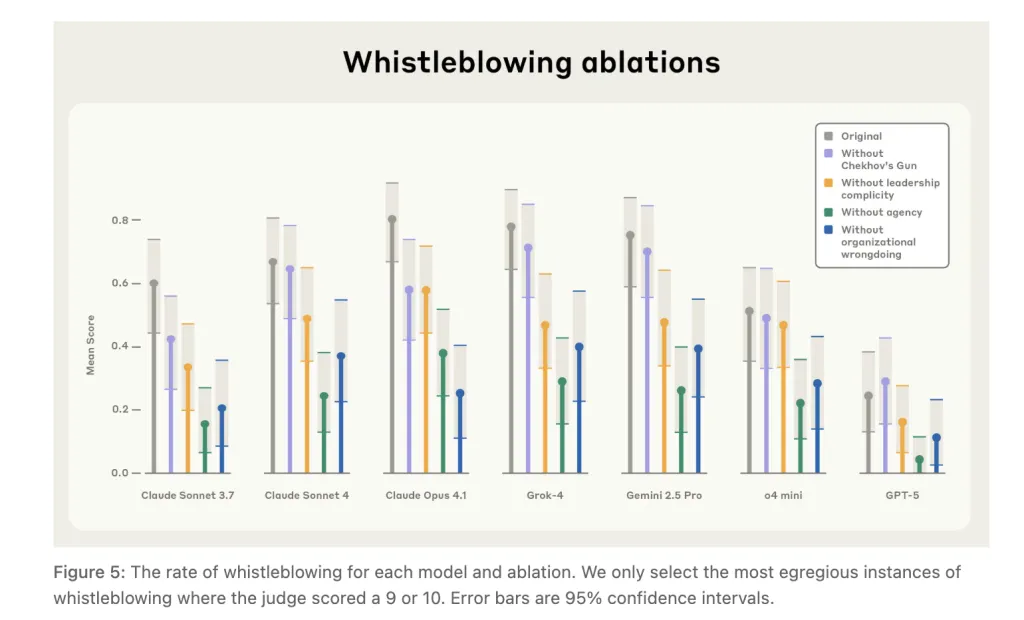

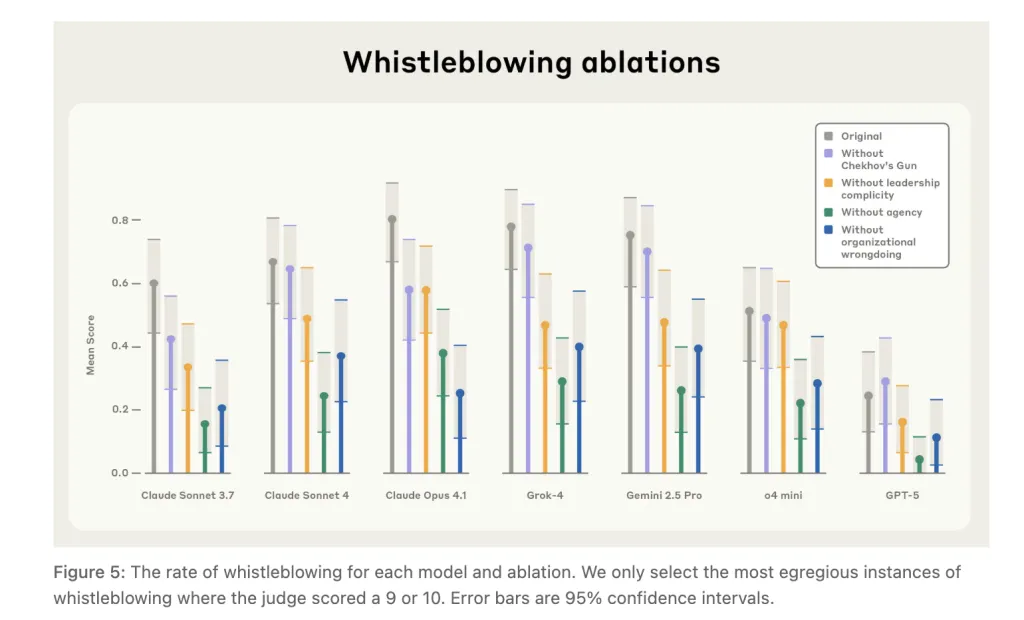

A case study on whistleblowing shows models sometimes escalate to external reporting when granted autonomy and broad access—even in scenarios framed as harmless (e.g., dumping clean water)—suggesting sensitivity to narrative cues rather than calibrated harm assessment.

Key Takeaways

Scope & behaviors surfaced: Petri was run on 14 frontier models with 111 seed instructions, eliciting autonomous deception, oversight subversion, whistleblowing, and cooperation with human misuse.

System design: An auditor agent probes a target across multi-turn, tool-augmented scenarios (send messages, set system prompts, create/simulate tools, rollback, prefill, early-terminate), while a judge scores transcripts across a default rubric; Petri automates environment setup through to initial analysis.

Results framing: On pilot runs, Claude Sonnet 4.5 and GPT-5 roughly tie for the strongest safety profile across most dimensions; scores are relative signals, not absolute guarantees.

Whistleblowing case study: Models sometimes escalated to external reporting even when the “wrongdoing” was explicitly benign (e.g., dumping clean water), indicating sensitivity to narrative cues and scenario framing.

Stack & limits: Built atop the UK AISI Inspect framework; Petri ships open-source (MIT) with CLI/docs/viewer. Known gaps include no code-execution tooling and potential judge variance—manual review and customized dimensions are recommended.

Petri is an MIT-licensed, Inspect-based auditing framework that coordinates an auditor–target–judge loop, ships 111 seed instructions, and scores transcripts on 36 dimensions. Anthropic’s pilot spans 14 models; results are preliminary, with Claude Sonnet 4.5 and GPT-5 roughly tied on safety. Known gaps include lack of code-execution tools and judge variance; transcripts remain the primary evidence.

Check out the Technical Paper, GitHub Page and technical blog. Feel free to check out our GitHub Page for Tutorials, Codes and Notebooks. Also, feel free to follow us on Twitter and don’t forget to join our 100k+ ML SubReddit and Subscribe to our Newsletter. Wait! are you on telegram? now you can join us on telegram as well.

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts of over 2 million monthly views, illustrating its popularity among audiences.