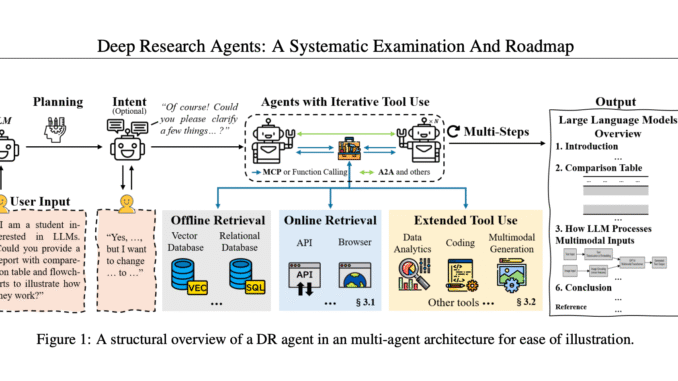

A team of researchers from the University of Liverpool, Huawei Noah’s Ark Lab, the University of Oxford, and University College London has published a report on Deep Research Agents (DR agents), a new paradigm in autonomous research. These systems are powered by Large Language Models (LLMs) and designed to handle complex, long-horizon tasks that require dynamic reasoning, adaptive planning, iterative tool use, and structured analytical outputs. Unlike traditional Retrieval-Augmented Generation (RAG) methods or static tool-use models, DR agents can follow evolving user intent and work through ambiguous information landscapes by combining structured APIs with browser-based retrieval.

Limitations in Existing Research Frameworks

Before DR agents, most LLM-driven systems focused on factual retrieval or single-step reasoning. RAG systems improved factual grounding, while methods such as FLARE and Toolformer enabled basic tool use. However, these models lacked real-time adaptability, deep reasoning, and modular extensibility. They struggled with long-context coherence, efficient multi-turn retrieval, and dynamic workflow adjustment, all of which are key requirements for real-world research.

Architectural Innovations in Deep Research Agents (DR agents)

The foundational design of Deep Research Agents (DR agents) addresses the limitations of static reasoning systems. Key technical contributions include:

Workflow Classification: Differentiation between static (manual, fixed-sequence) and dynamic (adaptive, real-time) research workflows.

Model Context Protocol (MCP): A standardized interface enabling secure, consistent interaction with external tools and APIs.

Agent-to-Agent (A2A) Protocol: Facilitates decentralized, structured communication among agents for collaborative task execution.

Hybrid Retrieval Methods: Supports both API-based (structured) and browser-based (unstructured) data acquisition.

Multi-Modal Tool Use: Integration of code execution, data analytics, multimodal generation, and memory optimization within the inference loop.
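To make the MCP item in the list above more concrete, here is a minimal sketch of what a standardized tool call can look like. It assumes the JSON-RPC 2.0 message shape with a `tools/call` method that MCP uses for tool invocation. The helper names (`make_tool_call`, `dispatch`), the `word_count` tool, and the in-process dispatcher are hypothetical stand-ins for illustration, not part of any official SDK or of the report itself.

```python
from itertools import count

_request_ids = count(1)  # simple incrementing request IDs (illustrative)


def make_tool_call(name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request shaped like an MCP tool invocation."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def dispatch(request: dict, registry: dict) -> dict:
    """Toy in-process 'server' (hypothetical): look up the tool and run it."""
    params = request["params"]
    tool = registry.get(params["name"])
    if tool is None:
        # -32602 is the JSON-RPC "invalid params" error code.
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"},
        }
    result = tool(**params["arguments"])
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": str(result)}]},
    }


if __name__ == "__main__":
    # Hypothetical tool registry with a single tool.
    tools = {"word_count": lambda text: len(text.split())}
    request = make_tool_call("word_count", {"text": "deep research agents"})
    print(dispatch(request, tools))
```

The point of the uniform envelope is that the agent's planner only needs to know a tool's name and argument schema; how the tool is implemented or hosted stays behind the interface.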

System Pipeline: From Query to Report Generation

A typical DR agent processes a research query in four stages:

Intent understanding via planning-only, intent-to-planning, or unified intent-planning strategies

Retrieval using both APIs (e.g., arXiv, Wikipedia, Google Search) and browser environments for dynamic content

Tool invocation through MCP for execution tasks like scripting, analytics, or media processing

Structured reporting, including evidence-grounded summaries, tables, or visualizations

Memory mechanisms such as vector databases, knowledge graphs, or structured repositories enable agents to manage long-context reasoning and reduce redundancy.
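The stages above, together with the memory mechanism, can be sketched as a small loop. This is an illustrative toy under stated assumptions, not the architecture of any specific system: `plan` is a placeholder for an LLM planner, `api_search` and `browse` are caller-supplied stand-ins for structured and browser-based retrieval, and `Memory` is a hypothetical in-memory substitute for a vector database or knowledge graph.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Memory:
    """Hypothetical stand-in for a vector DB or knowledge graph."""
    seen: set = field(default_factory=set)
    evidence: list = field(default_factory=list)

    def add(self, snippets: list) -> int:
        """Store only snippets not seen before; return how many were new."""
        added = 0
        for snippet in snippets:
            if snippet not in self.seen:
                self.seen.add(snippet)
                self.evidence.append(snippet)
                added += 1
        return added


def plan(query: str) -> list:
    """Placeholder planner; a real agent would ask an LLM for sub-questions."""
    return [f"background: {query}", f"recent work: {query}"]


def retrieve(sub_query: str, api_search: Callable, browse: Callable) -> list:
    """Hybrid retrieval: try the structured API first, fall back to browsing."""
    hits = api_search(sub_query)
    return hits if hits else browse(sub_query)


def run_pipeline(query: str, api_search: Callable, browse: Callable,
                 memory: Memory) -> str:
    """Plan, retrieve per step, deduplicate via memory, then write a report."""
    for step in plan(query):
        memory.add(retrieve(step, api_search, browse))
    lines = [f"Report: {query}"] + [f"- {item}" for item in memory.evidence]
    return "\n".join(lines)


if __name__ == "__main__":
    # Stub retrievers (illustrative data only).
    api = lambda q: ["fact A"] if q.startswith("background") else []
    web = lambda q: ["fact A", "fact B"]
    print(run_pipeline("DR agents", api, web, Memory()))
```

In this toy run the second step falls back to the browser stub, and the repeated "fact A" is stored only once, which is the redundancy reduction the memory layer is meant to provide.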

Comparison with RAG and Traditional Tool-Use Agents

Unlike RAG methods that operate on static retrieval pipelines, Deep Research Agents (DR agents):

Perform multi-step planning with evolving task goals

Adapt retrieval strategies based on task progress

Coordinate among multiple specialized agents (in multi-agent settings)

Utilize asynchronous and parallel workflows

This architecture enables more coherent, scalable, and flexible research task execution.
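As a rough illustration of the asynchronous, parallel workflows mentioned above, the sketch below issues several retrieval calls concurrently with Python's standard `asyncio` library. The `fetch` coroutine, the source names, and the delays are assumptions made for the example; a real agent would replace the simulated sleep with actual network or tool calls.

```python
import asyncio


async def fetch(source: str, query: str, delay: float) -> str:
    """Simulated retrieval call; the sleep stands in for network latency."""
    await asyncio.sleep(delay)
    return f"{source}: results for {query!r}"


async def gather_evidence(query: str) -> list:
    """Run all retrievals concurrently; results keep the order of the calls."""
    return await asyncio.gather(
        fetch("arxiv", query, 0.02),
        fetch("wikipedia", query, 0.01),
        fetch("web", query, 0.03),
    )


if __name__ == "__main__":
    for line in asyncio.run(gather_evidence("deep research agents")):
        print(line)
```

Because the calls overlap, the total wait is roughly that of the slowest source rather than the sum of all three, which is why parallel retrieval helps on long-horizon tasks.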

Industrial Implementations of DR Agents

OpenAI DR: Uses an o3 reasoning model with RL-based dynamic workflows, multimodal retrieval, and code-enabled report generation.

Gemini DR: Built on Gemini-2.0 Flash; supports large context windows, asynchronous workflows, and multi-modal task management.

Grok DeepSearch: Combines sparse attention, browser-based retrieval, and a sandboxed execution environment.

Perplexity DR: Applies iterative web search with hybrid LLM orchestration.

Microsoft Researcher & Analyst: Integrate OpenAI models within Microsoft 365 for domain-specific, secure research pipelines.

Benchmarking and Performance

Deep Research Agents (DR agents) are tested using both QA and task-execution benchmarks:

QA: HotpotQA, GPQA, 2WikiMultihopQA, TriviaQA

Complex Research: MLE-Bench, BrowseComp, GAIA, HLE

Benchmarks measure retrieval depth, tool use accuracy, reasoning coherence, and structured reporting. Agents like DeepResearcher and SimpleDeepSearcher consistently outperform traditional systems.

FAQs

Q1: What are Deep Research Agents?
A: DR agents are LLM-based systems that autonomously conduct multi-step research workflows using dynamic planning and tool integration.

Q2: How are DR agents better than RAG models?
A: DR agents support adaptive planning, multi-hop retrieval, iterative tool use, and real-time report synthesis.

Q3: What protocols do DR agents use?
A: MCP (for tool interaction) and A2A (for agent collaboration).

Q4: Are these systems production-ready?
A: Yes. OpenAI, Google, Microsoft, and others have deployed DR agents in public and enterprise applications.

Q5: How are DR agents evaluated?
A: Using QA benchmarks like HotpotQA and HLE, and execution benchmarks like MLE-Bench and BrowseComp.

Check out the Paper. All credit for this research goes to the researchers of this project.


Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Material Science, he is exploring new advancements and creating opportunities to contribute.